Due to the popularity of these posts, I have decided to move all of the benchmarking information over to its own dedicated page. Please see the new framework shootout page for the latest information.

This post is the continuation of a series. Please read Round 1, Round 2, and Round 3 first if you are just now joining.

While I had originally intended for round 4 to showcase how microframeworks are changing the way we do "quick and dirty" web development (and how they make using PHP as "an extension to HTML" old hat), my current programming habits have kept me involved in the more "full-stack" framework solutions. So, rather than spitting out various benchmarks of frameworks that I have little or no interaction with, and since enough time has passed since the last "shootout" that the landscape has changed a bit (with the introduction of Pyramid and the release of Rails 3), I have instead decided to showcase the most recent data on the frameworks that I personally find myself in contact with on a regular basis.

Warning: Everything is different this time around.

These benchmarks were all run on a fresh Amazon EC2 instance in order to (hopefully) achieve a more isolated environment. Obviously, since these benchmarks have all been run on a completely different box than any of the previous rounds, no previous data should be compared with these numbers.

What you should know about Round 4:

- The EC2 instance used was: ami-da0cf8b3 m1.large ubuntu-images-us/ubuntu-lucid-10.04-amd64-server-20101020.manifest.xml

- As a "Large" instance, Amazon describes the resources as: 7.5 GB of memory, 4 EC2 Compute Units (2 virtual cores with 2 EC2 Compute Units each), 850 GB of local instance storage, 64-bit platform.

- The various system software used was whatever was current as of November 18, 2010 on Ubuntu 10.04's repositories.

- Apache 2.2.14 was used for all tests.

- Python 2.6.5 and mod_wsgi 3.3 (embedded mode) were used for the Python tests.

- Ruby 1.8.7 (except for the last Rails 3 test) and Phusion Passenger 3.0 were used for the Rails tests.

- ApacheBench was run with -n 10000 and -c 10 about 5-10 times each, and the "best guess average" was chosen (yeah, I'm lazy).

- The Pyramid/TG test apps used SQLAlchemy as the ORM and Jinja2 as the templating system (Jinja2 was consistently around 25-50r/s faster than Mako for me).
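For anyone who would rather compute the average than eyeball it, here is a minimal sketch of how the repeated ApacheBench runs described above could be automated. It is not the script used for these numbers (none was used); the function names and the target URL are hypothetical, and it assumes `ab` is on the PATH and prints its usual "Requests per second" summary line.

```python
import re
import statistics
import subprocess

# Matches the summary line ApacheBench prints, e.g.
# "Requests per second:    1234.56 [#/sec] (mean)"
RPS_PATTERN = re.compile(r"Requests per second:\s+([\d.]+)")


def parse_requests_per_second(ab_output):
    """Pull the requests-per-second figure out of one ab report."""
    match = RPS_PATTERN.search(ab_output)
    if match is None:
        raise ValueError("no 'Requests per second' line found")
    return float(match.group(1))


def run_ab_once(url, requests=10000, concurrency=10):
    """Run ab once with the -n/-c values listed above and return its r/s."""
    result = subprocess.run(
        ["ab", "-n", str(requests), "-c", str(concurrency), url],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_requests_per_second(result.stdout)


def summarize(samples):
    """Return (mean, median) of the per-run r/s figures."""
    return statistics.mean(samples), statistics.median(samples)
```

Something like `summarize([run_ab_once("http://localhost/") for _ in range(5)])` would then give a mean and a median across five runs; the median is included because a single slow run can drag the mean down.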

Remember, nothing here is really all that scientific and your mileage WILL vary. Now on to the results...

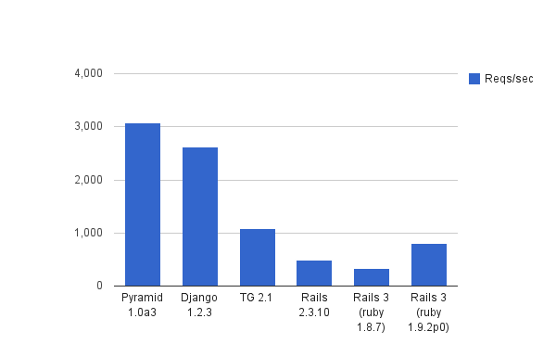

The "Hello World" string test

The "Hello World" string test simply spits out a string response. There's no template or DB calls involved, so the level of processing should be minimal.
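To give a sense of how little work this test asks for, here is a bare WSGI callable that does the same thing with no framework at all. It is only an illustrative baseline, not one of the test apps; the only thing assumed about the setup is that mod_wsgi looks for a callable named `application` by default.

```python
def application(environ, start_response):
    """Return a fixed plain-text body; no templates, no database."""
    body = b"Hello World"
    headers = [
        ("Content-Type", "text/plain"),
        ("Content-Length", str(len(body))),
    ]
    start_response("200 OK", headers)
    # WSGI expects an iterable of byte strings.
    return [body]
```

Each framework's version of this test layers its own routing and request/response handling on top of a callable like this one, which is roughly what the numbers end up measuring.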

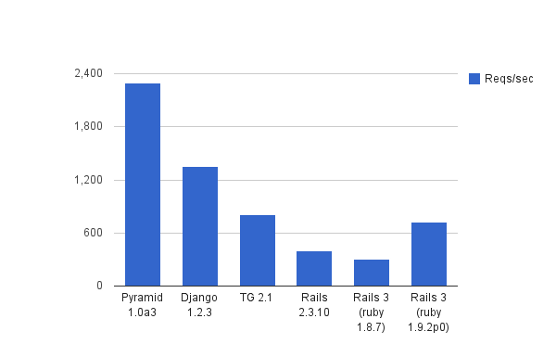

The template test

The template test simply spits out Lorem Ipsum via a template (thus engaging the framework's templating systems).

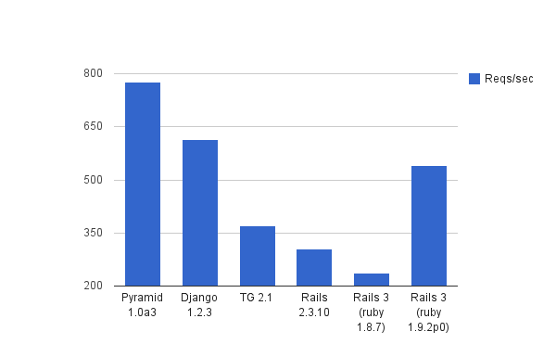

The template + DB query test

The template/db test simply loads 5 rows of Lorem Ipsum from a SQLite DB and spits it out via a template (thus engaging both the framework's ORM and templating system).
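The shape of this test can be sketched with nothing but the standard library. Note the swap: the Pyramid/TG apps used SQLAlchemy and Jinja2, while this sketch substitutes the `sqlite3` module and `string.Template` so that it runs anywhere. The table name, column names, and markup are all made up for illustration.

```python
import sqlite3
from string import Template

# Hypothetical page and row templates standing in for a real template engine.
PAGE = Template("<html><body>\n$rows\n</body></html>")
ROW = Template("<p>$text</p>")


def make_db():
    """Create an in-memory SQLite DB holding 5 rows of placeholder text."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE lorem (id INTEGER PRIMARY KEY, text TEXT)")
    conn.executemany(
        "INSERT INTO lorem (text) VALUES (?)",
        [("Lorem ipsum dolor sit amet %d" % i,) for i in range(1, 6)],
    )
    conn.commit()
    return conn


def render_page(conn):
    """Load the 5 rows and push them through the templates."""
    cursor = conn.execute("SELECT text FROM lorem ORDER BY id LIMIT 5")
    rows = "\n".join(ROW.substitute(text=text) for (text,) in cursor)
    return PAGE.substitute(rows=rows)
```

Calling `render_page(make_db())` returns the full HTML string. In the real test apps the query goes through the framework's ORM and the rendering through its template engine, so both layers add overhead that this sketch does not have.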

Closing thoughts

- The Pyramid Alpha results excite me quite a bit with all the talk of the various frameworks looking to merge with it. Could this really become the "one Python framework to rule them all"?

- Django is still going strong, and continues to be an exceptional choice if you're into its way of doing things.

- The Rails results are interesting because Rails 2 apparently outperforms Rails 3 on Ruby 1.8. Of course, once you move to Ruby 1.9, Rails 3 runs quite nicely.

- Don't believe these numbers? Feel free to boot up your own EC2 instance and run one of the test apps:
